Some Thoughts on Massive Data Clustering for Data Segmentation

Understanding the Basics

Massive data clustering refers to grouping very large datasets, often on the scale of terabytes or petabytes, into clusters so that points within a cluster are as similar as possible and points in different clusters are as dissimilar as possible. When applied to data segmentation, this technique divides large datasets into segments for easier analysis, such as customer groups in marketing or regions in image processing. Clustering is unsupervised, meaning it doesn't rely on labeled data, which makes it well suited to exploratory analysis in big data environments. Unlike traditional methods that work well on small datasets, clustering at this scale must cope with high computational cost and memory constraints.

In essence, segmentation via clustering turns raw, unstructured big data into actionable insights. For instance, in customer segmentation, businesses can identify distinct user behaviors from transaction logs to tailor marketing strategies.

Key Challenges in Handling Massive Datasets

Working with massive data introduces several hurdles:

- Scalability: Standard algorithms like K-means can become inefficient as data size grows, because each iteration makes a full pass over every data point, leading to long processing times.

- Dimensionality: High-dimensional data (the "curse of dimensionality") can dilute distances between points, making clusters harder to detect.

- Noise and Outliers: Big data often contains irrelevant or erroneous entries that can skew results.

- Distributed Processing: Data may be spread across multiple nodes, requiring parallel computing frameworks.

These challenges necessitate adapted techniques that leverage distributed systems like Apache Spark or Hadoop to process data in parallel. For segmentation, ensuring segments are interpretable and stable across massive volumes is crucial, as unstable clusters could lead to poor decision-making.
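
The dimensionality point above can be made concrete with a small experiment. The following is a minimal sketch, assuming uniformly random points in a unit hypercube (an illustrative choice, not a claim about any real dataset). The helper name `relative_contrast` is hypothetical. It measures how much farther the farthest point is than the nearest one, relative to the nearest distance. As the number of dimensions grows, this ratio tends to shrink, which is why distance-based clustering gets harder.

```python
import math
import random


def relative_contrast(n_points, dim, seed=0):
    """Return (max_dist - min_dist) / min_dist from one random query point
    to n_points uniformly random points in the unit hypercube."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))
    return (max(dists) - min(dists)) / min(dists)


# Print the contrast for a few dimensionalities (values depend on the seed).
for dim in (2, 10, 100, 1000):
    print(dim, round(relative_contrast(500, dim), 3))
```

A low contrast means the nearest and farthest neighbors are almost equally far away, so reducing dimensionality before clustering is often worth considering.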

Popular Techniques and Adaptations

Several clustering methods have been scaled for massive data segmentation:

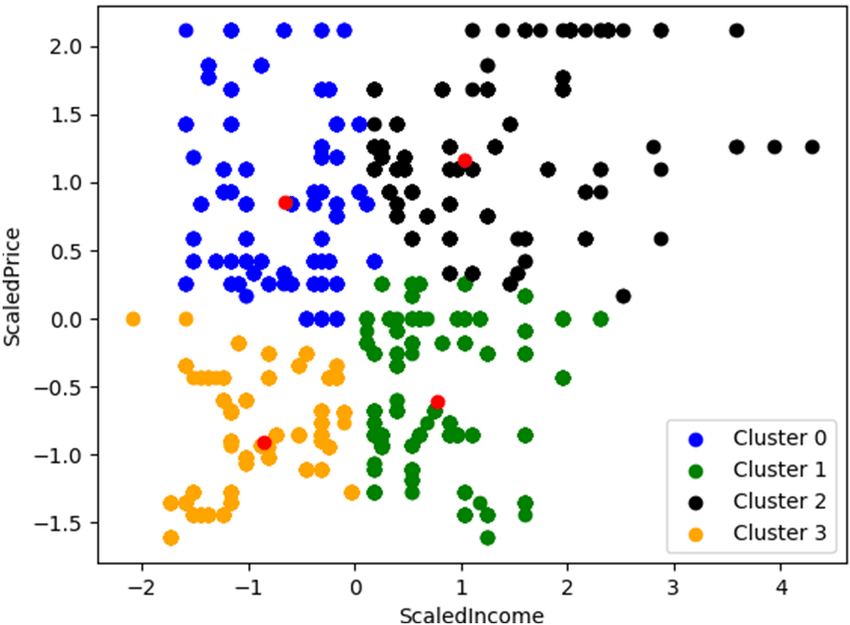

- Partition-Based Clustering (e.g., K-means): This divides data into K clusters by minimizing intra-cluster variance. For massive data, variants like Mini-Batch K-means or parallel K-means on Spark reduce computation time by updating the cluster centers from small subsets of the data at each step. It suits customer segmentation, where K is often chosen with the elbow method.

- Hierarchical Clustering: Builds a tree of clusters (dendrogram) either bottom-up (agglomerative) or top-down (divisive). For big data, approximations like BIRCH (Balanced Iterative Reducing and Clustering using Hierarchies) handle large volumes by summarizing data into cluster features. This is useful in genomic data segmentation where nested structures matter.

- Density-Based Clustering (e.g., DBSCAN): Identifies clusters as dense regions separated by low-density areas, excelling at handling noise. Extensions like HDBSCAN, which handles clusters of varying density, and distributed implementations on Spark make it viable for massive datasets, such as segmenting geospatial data.

- Neural Network-Based Approaches: Recent advancements incorporate deep learning, such as autoencoders that compress the data into a lower-dimensional representation, which is then clustered. This is particularly effective for high-dimensional massive data, for example in customer segmentation.

Other tools like K-Medoids (more robust to outliers than K-means) and hybrid methods that combine several clustering techniques are also gaining traction in 2025.
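
To illustrate the mini-batch idea mentioned for K-means above, here is a minimal pure-Python sketch. It assumes a per-center learning rate of 1/count, which is one common formulation of mini-batch K-means; it is not the implementation used by Spark or any specific library. The function names (`sq_dist`, `nearest`, `mini_batch_kmeans`) and the toy data are hypothetical and only for illustration.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(point, centers):
    """Index of the center closest to the given point."""
    return min(range(len(centers)), key=lambda j: sq_dist(point, centers[j]))


def mini_batch_kmeans(points, k, batch_size=50, n_iter=100, seed=0):
    """Update k centers from small random batches instead of the full dataset."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    counts = [0] * k  # how many points each center has absorbed so far
    for _ in range(n_iter):
        batch = rng.sample(points, min(batch_size, len(points)))
        # Assign the whole batch first, using the centers as they are now.
        assignments = [nearest(p, centers) for p in batch]
        for p, j in zip(batch, assignments):
            counts[j] += 1
            eta = 1.0 / counts[j]  # step size shrinks as the center matures
            centers[j] = [(1 - eta) * c + eta * x for c, x in zip(centers[j], p)]
    return centers


# Toy data: two well-separated blobs around (0, 0) and (10, 10).
rng = random.Random(1)
data = [(rng.gauss(0, 0.5), rng.gauss(0, 0.5)) for _ in range(300)]
data += [(rng.gauss(10, 0.5), rng.gauss(10, 0.5)) for _ in range(300)]
print(sorted(mini_batch_kmeans(data, k=2)))
```

Each iteration touches only `batch_size` points, so the cost per step does not grow with the dataset size. The trade-off is that the centers are noisier than those from full-batch K-means.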

| Technique | Pros for Massive Data | Cons | Best Suited For |
|---|---|---|---|
| K-means | Fast, scalable with mini-batches | Sensitive to initialization, assumes spherical clusters | Customer behavior analysis |
| Hierarchical | No need to specify K upfront | Computationally intensive for very large data | Hierarchical data like taxonomies |
| DBSCAN | Handles arbitrary shapes, robust to noise | Parameter-sensitive (epsilon, minPts) | Geospatial data or anomaly detection |
| Neural-Based | Deals with high dimensions well | Requires training a model, complex to tune | Image or text segmentation |
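
The parameter sensitivity listed for DBSCAN in the table can be seen in a small sketch. This is a minimal pure-Python version of the basic algorithm, assuming Euclidean distance and a brute-force neighbor search (quadratic in the number of points, so not suitable for massive data as written; scalable implementations typically rely on spatial indexes or distributed partitioning). The names `dbscan`, `region_query`, `eps`, and `min_pts` are illustrative choices, with `eps` and `min_pts` corresponding to epsilon and minPts.

```python
import math

NOISE = -1


def region_query(points, i, eps):
    """Indices of all points within eps of points[i], including i itself."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or NOISE (-1)."""
    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins but does not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:  # core point: keep expanding
                queue.extend(j_neighbors)
    return labels


# Two dense 2x2 groups and one isolated point.
points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11),
          (5, 5)]
print(dbscan(points, eps=1.5, min_pts=3))  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
print(dbscan(points, eps=0.5, min_pts=3))  # all -1: eps too small, everything is noise
```

With `eps=1.5` the two groups are recovered and the isolated point is marked as noise. With `eps=0.5` no point has enough neighbors, so every point is labeled noise, which shows why these two parameters need care.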

Applications in Real-World Scenarios

- Marketing and Customer Segmentation: Clustering massive transaction data to build personalized campaigns for each customer group.

- Image and Video Processing: Segmenting pixels or frames in large multimedia datasets using density-based methods.

- Healthcare: Grouping patient records from electronic health systems for targeted treatments.

- Finance: Fraud detection by segmenting transaction patterns in real-time big data streams.

In all cases, the goal is to make massive data manageable by breaking it into segments that reveal patterns.

Future Directions and Considerations

As data volumes keep growing, two directions look promising: quantum-inspired clustering for even faster processing, and federated learning for privacy-preserving segmentation across distributed massive datasets. Integrating AI for automatic hyperparameter tuning could make these techniques accessible to non-specialists. However, ethical concerns such as bias in clusters (e.g., discriminatory segmentation) must be addressed through fair algorithms.

Overall, massive clustering for data segmentation is a powerhouse in data science, transforming chaos into clarity, but success hinges on choosing the right scalable technique for the job.
